
With the development of technology and the changing demands of online audiences, content is evolving too. In the past, we competed on keyword density; today, content succeeds when it is clear and helpful to AI systems such as Google AI, Perplexity, and Claude.

Promodo experts explain how to optimize content for LLMs, why AI systems evaluate it differently from traditional search, and how to move from outdated methods to creating content that AI systems will index and cite.

From the very beginning, the core principle was clear: search results should match the user’s query. The logic for selecting an answer (a web page in the results) was quite primitive, which made rankings easy to manipulate. Back then, it was enough to add more keywords to the text or even stuff the meta keywords field, and you were number one in the results. Search engines closed this loophole fairly quickly.

Vector models used by Google:

- GloVe (Global Vectors for Word Representation) – a word embedding model developed at Stanford University. It learns vector representations of words that reflect semantic relationships from a global word-word co-occurrence matrix built from a text corpus.

- Word2vec – a natural language processing technique developed at Google. The word2vec algorithm uses a shallow neural network to learn word associations from a large text corpus.

- and others.
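Both models map words to vectors so that semantic closeness becomes measurable, typically with cosine similarity. The sketch below uses tiny, made-up 3-dimensional vectors (real GloVe or word2vec vectors have hundreds of dimensions and are learned from a corpus) purely to show the calculation.

```python
import math

# Hypothetical toy embeddings; real models learn vectors with 100+ dimensions.
EMBEDDINGS = {
    "car": [0.9, 0.1, 0.0],
    "automobile": [0.85, 0.15, 0.05],
    "garden": [0.05, 0.2, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 = similar, near 0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

for word in ("automobile", "garden"):
    sim = cosine_similarity(EMBEDDINGS["car"], EMBEDDINGS[word])
    print(f"car vs {word}: {sim:.2f}")
```

Words used in similar contexts end up pointing in similar directions, which is what lets a search system treat “car” and “automobile” as related without any shared letters.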

Google has built a complex system of machine learning models and algorithms that evaluate content independently while constantly influencing one another.

2011: Fight against “content farms” (Panda Update). This was the first major blow to keyword-driven tactics: simply adding keywords was no longer enough.

Google Panda is part of Google’s search algorithm designed to downgrade websites with low-quality, duplicate, or spammy content. The main goal of the update was to filter out sites that didn’t add value to users and reduce their visibility in search results.

2015: Introduction of RankBrain, a machine learning system that helps Google better understand user queries and page context. It can be considered the first step toward AI-driven search.

2019: BERT Update. Google introduced BERT (Bidirectional Encoder Representations from Transformers), a model that understands the context of words in queries far more deeply. The key change was that matching keywords no longer sufficed: content now had to address the user’s underlying intent behind the query.

2023: Helpful Content Update. From this point on, search engines evaluate the overall quality of content in detail rather than just keyword usage.

This shaped the following algorithm for analyzing and identifying “ideal content” for the search engine:

LSI (latent semantic indexing) is a method for capturing the context and meaning of text rather than just counting keywords. It is often cited in SEO, although Google has said it does not rely on “LSI keywords.”
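The core of LSI is a truncated singular value decomposition (SVD) of a term-document matrix. In the sketch below (toy documents, NumPy assumed to be installed, k=2 latent dimensions chosen by hand), the query “car” shares no words with “automobile engine repair”, yet the two end up close in the latent space because “car” and “automobile” co-occur with the same terms.

```python
import numpy as np

# Toy corpus: two topics that share no vocabulary.
DOCS = [
    "car engine repair",
    "automobile engine repair",
    "car engine",
    "flower garden soil",
    "garden soil",
]

VOCAB = sorted({word for doc in DOCS for word in doc.split()})
INDEX = {word: i for i, word in enumerate(VOCAB)}

def to_vector(text):
    """Term-count vector over VOCAB; unknown words are ignored."""
    vec = np.zeros(len(VOCAB))
    for word in text.split():
        if word in INDEX:
            vec[INDEX[word]] += 1
    return vec

# Term-document matrix: one row per term, one column per document.
A = np.column_stack([to_vector(doc) for doc in DOCS])

# Truncated SVD: keep only the k strongest latent "concepts".
U, s, Vt = np.linalg.svd(A, full_matrices=False)
k = 2
U_k = U[:, :k]

def to_latent(vec):
    """Project a term vector into the k-dimensional concept space."""
    return U_k.T @ vec

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

query = to_vector("car")
print("raw cosine, car vs doc 1:", cosine(query, A[:, 1]))
print("LSI cosine, car vs doc 1:", cosine(to_latent(query), to_latent(A[:, 1])))
print("LSI cosine, car vs doc 3:", cosine(to_latent(query), to_latent(A[:, 3])))
```

Keyword counting scores the “automobile” document at zero for the query “car”; the latent projection groups it with the car documents and keeps the garden documents apart.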

GPT-3 was introduced in 2020, and within about two years, with the launch of ChatGPT in late 2022, the technology became available to a mass audience.

We no longer “optimize” — we “engineer.”

— Mike King (iPullRank), SMX Advanced 2025 Conference

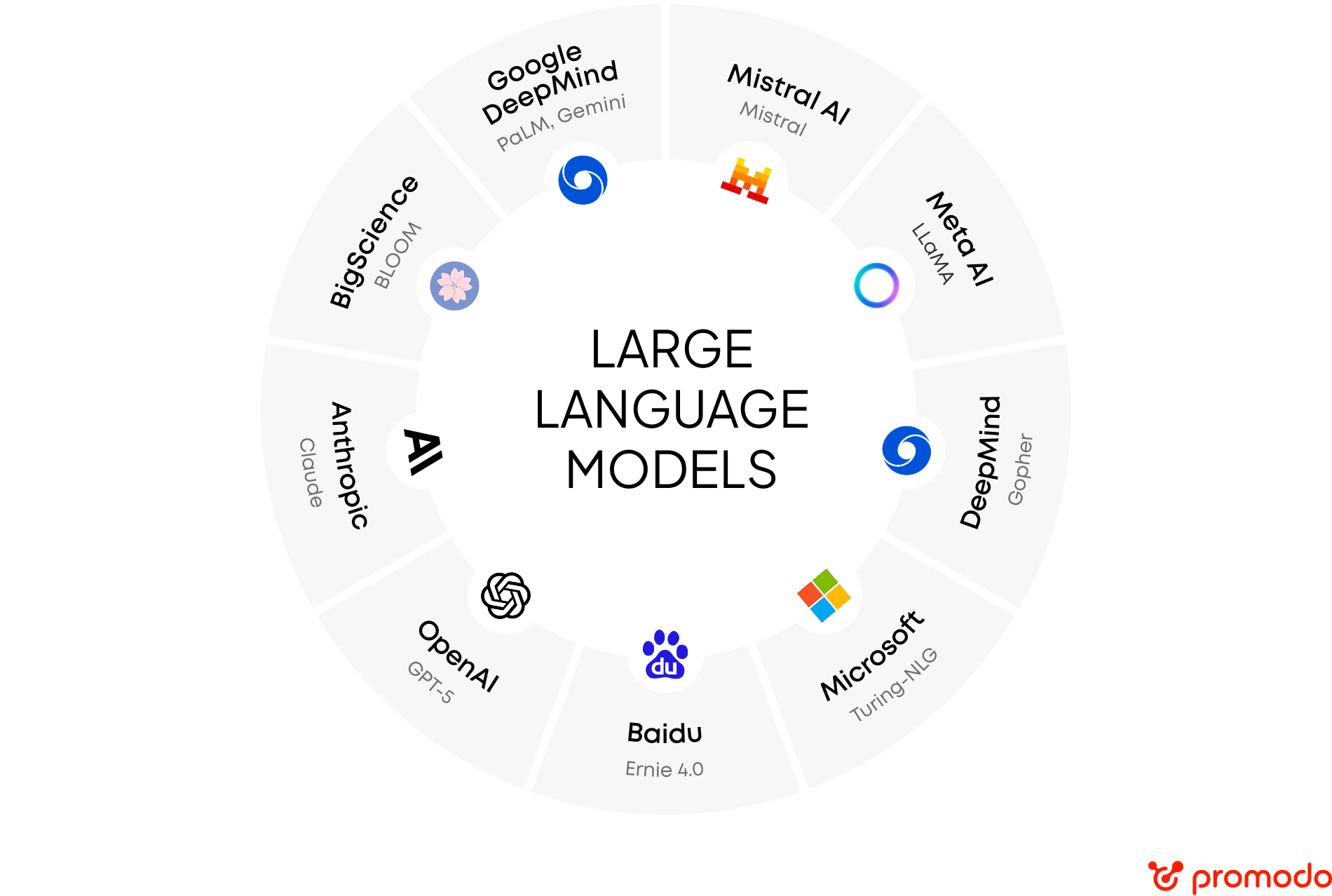

Today, LLMs are offered not only by major players but also as custom-built models for individual companies and even individual users. You can use them to generate text, images, and videos, or simply to chat.

Different models follow different operating logic and are trained on different sources of information. Let’s look at a few examples to better understand how AI works from the inside.

Being the intelligence for AI means being the source of its answers.

— Will Scott, SMX Advanced 2025 Conference

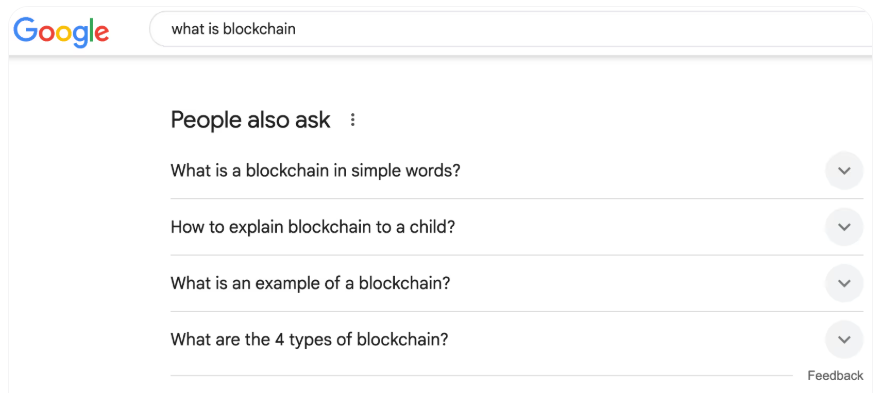

Google confirms that AI Overviews use the “query fan-out” technique: the system launches multiple parallel queries to build a single response. This means your page can be included in the answer even if it is not an exact match for the original query, as long as it answers one of the sub-queries. The DEJAN experiment confirmed this hypothesis.

“Query fan-out” is a technique in AI systems, particularly in search systems, that involves breaking down the user’s initial query into several related sub-queries.

To understand exactly how it works, review Google’s patent on this technique.
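A minimal sketch of the fan-out idea, assuming a hypothetical `fan_out` function that uses fixed templates (Google’s system generates sub-queries with a model) and a toy word-overlap search over four made-up pages. Only the top page per sub-query is kept, so the waterproofing page, which never wins for the original query alone, still makes it into the combined answer set.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index: URL -> page text.
PAGES = {
    "/best-hiking-boots": "best hiking boots reviewed",
    "/boot-sizing": "hiking boots sizing guide",
    "/waterproofing": "waterproof hiking boots care",
    "/camping-tents": "best camping tents",
}

def fan_out(query):
    """Hypothetical decomposition into related sub-queries (real systems use an LLM)."""
    return [query, f"{query} sizing", f"waterproof {query}"]

def search(query):
    """Return the single best page by word overlap; ties are broken alphabetically by URL."""
    words = set(query.split())
    return min(PAGES, key=lambda url: (-len(words & set(PAGES[url].split())), url))

def fan_out_search(query):
    sub_queries = fan_out(query)
    # Run all sub-queries in parallel; map() keeps results in sub-query order.
    with ThreadPoolExecutor() as pool:
        hits = list(pool.map(search, sub_queries))
    return list(dict.fromkeys(hits))  # de-duplicate while preserving order

print("single query:", search("hiking boots"))
print("fan-out:", fan_out_search("hiking boots"))
```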

A recent study by Metehan Yesilyurt identified 59 ranking patterns used in Perplexity, along with cryptographic schemes for content evaluation.

However, some aspects of this system may remain closed or unverified, since access to the details of its internal workings is limited.

Perplexity uses a complex three-level (L3) re-ranking system for object retrieval that can fundamentally change search results. The system includes safeguards that can discard an entire result set if it does not meet quality thresholds, so that users see only highly reliable matches.
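The discard behavior can be sketched as a filter-then-check step. The parameter names `min_quality` and `min_results`, their default values, and the quality scores are hypothetical; the actual thresholds are not given here.

```python
from dataclasses import dataclass

@dataclass
class Result:
    url: str
    quality: float  # hypothetical model-assigned quality score, 0..1

def l3_rerank(results, min_quality=0.6, min_results=2):
    """Reorder by quality, drop weak results, and reject the whole set if too few survive."""
    kept = sorted(
        (r for r in results if r.quality >= min_quality),
        key=lambda r: r.quality,
        reverse=True,
    )
    if len(kept) < min_results:
        return []  # entire result set discarded
    return kept

batch = [Result("/a", 0.9), Result("/b", 0.4), Result("/c", 0.7)]
print([r.url for r in l3_rerank(batch)])
print(l3_rerank([Result("/a", 0.9), Result("/b", 0.3)]))
```

The second call shows the key difference from ordinary ranking: a single strong result is not enough, so nothing is returned at all.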

Let’s take a closer look at the parameters that have been identified and how they affect the content evaluated by the AI-powered search system.

In short, the L3 re-ranking system does more than reorder the generated results: it can reject an entire set of search results outright if they fail its quality checks.

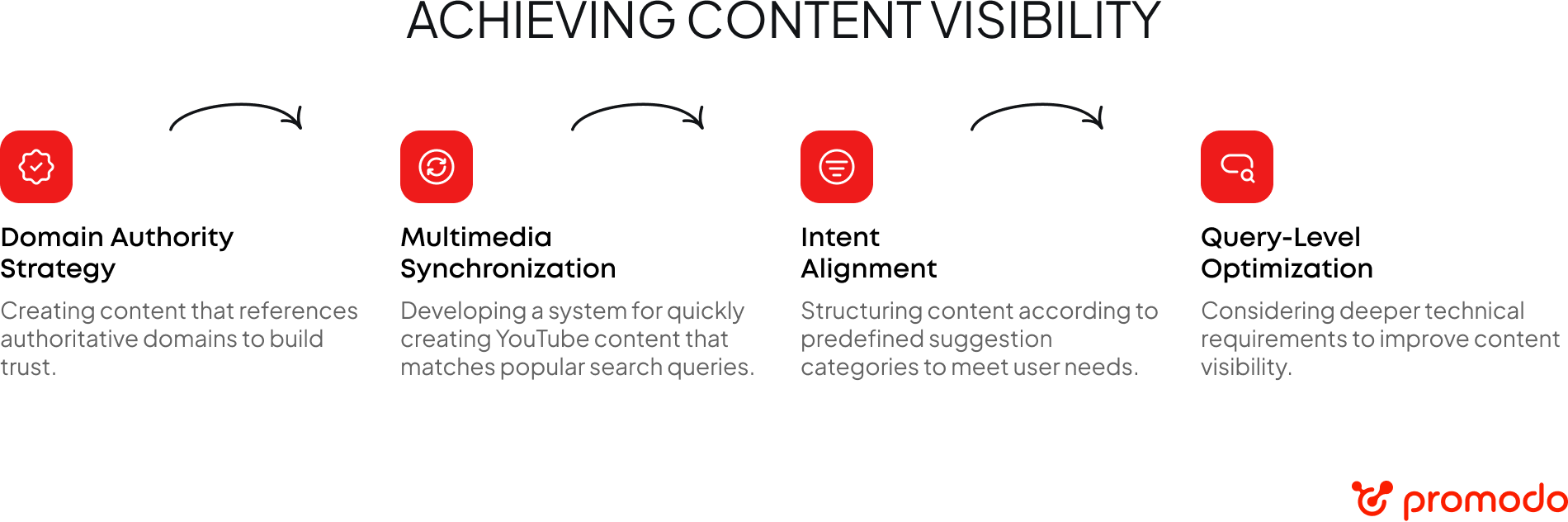

To get your content displayed, you need not only keyword optimization but also topical authority and quality signals that pass machine learning evaluation.

The researcher also found that the Perplexity ranking system includes manually configured authoritative domains.

Key authoritative domains by category (not a complete list):

- E-commerce: amazon.com, ebay.com, walmart.com, bestbuy.com, etsy.com, target.com, costco.com, aliexpress.com

- Productivity: github.com, notion.so, slack.com, figma.com, jira.com, asana.com, confluence.com, airtable.com

- Communication: whatsapp.com, telegram.org, discord.com, messenger.com, signal.org, microsoftteams.com

- Social media: linkedin.com, twitter.com, reddit.com, facebook.com, instagram.com, pinterest.com

- Education: coursera.org, udemy.com, edx.org, khanacademy.org, skillshare.com

- Travel: booking.com, airbnb.com, expedia.com, kayak.com, skyscanner.net
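The study describes these domains as manually configured, but it does not say here how they are applied. One plausible mechanism is a multiplicative score boost; the sketch below assumes that, with a hypothetical factor of 1.5 and only a few domains from the list.

```python
from urllib.parse import urlparse

# A few domains from the list above; the boost factor is a hypothetical value.
AUTHORITATIVE_DOMAINS = {"amazon.com", "github.com", "reddit.com", "coursera.org", "booking.com"}
BOOST = 1.5

def domain_of(url):
    """Host name without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host

def boosted_score(url, base_score):
    """Multiply the base score for exact matches in the allowlist (subdomains are not matched)."""
    return base_score * BOOST if domain_of(url) in AUTHORITATIVE_DOMAINS else base_score

candidates = [("https://example.com/review", 0.7), ("https://www.reddit.com/r/hiking", 0.5)]
ranked = sorted(candidates, key=lambda c: boosted_score(*c), reverse=True)
print([url for url, _ in ranked])
```

Under this assumption, a weaker page on an allowlisted domain can outrank a stronger page elsewhere, which is why such lists matter for visibility.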

A strong connection has been found between Perplexity and YouTube: YouTube videos whose titles exactly match trending Perplexity queries gain significant ranking advantages on both platforms. Check the experiment results yourself here.

To rank the content that will be included in the results, Perplexity uses a complex system for categorizing user intent:

Content that matches these pre-programmed suggestion categories gains visibility because it aligns with predefined high-value user intents.
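The category list itself is not reproduced here, so the sketch below uses hypothetical categories and trigger words to show the general idea of rule-based intent matching: a query is mapped to the first predefined intent whose triggers it contains.

```python
# Hypothetical intent categories and trigger words, for illustration only.
INTENT_RULES = {
    "comparison": ("vs", "versus", "compare", "best"),
    "how_to": ("how", "guide", "steps", "tutorial"),
    "transactional": ("buy", "price", "deal", "discount"),
}

def classify_intent(query):
    """Return the first intent whose trigger words appear in the query, else 'informational'."""
    words = query.lower().split()
    for intent, triggers in INTENT_RULES.items():
        if any(trigger in words for trigger in triggers):
            return intent
    return "informational"

for q in ("Notion vs Asana", "how to pack a carry-on", "buy running shoes", "history of Rome"):
    print(q, "->", classify_intent(q))
```

A page built around one clear intent (for example, a head-to-head comparison) maps cleanly onto one predefined category, which is the alignment the paragraph above describes.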

Thus, the content goes through the following verification:

Note that these verification stages rely on manual configurations and predefined optimization patterns.

Here is a summary table of the ranking factors:

Studying these factors and their impact in detail can significantly help you improve your ranking in Perplexity.

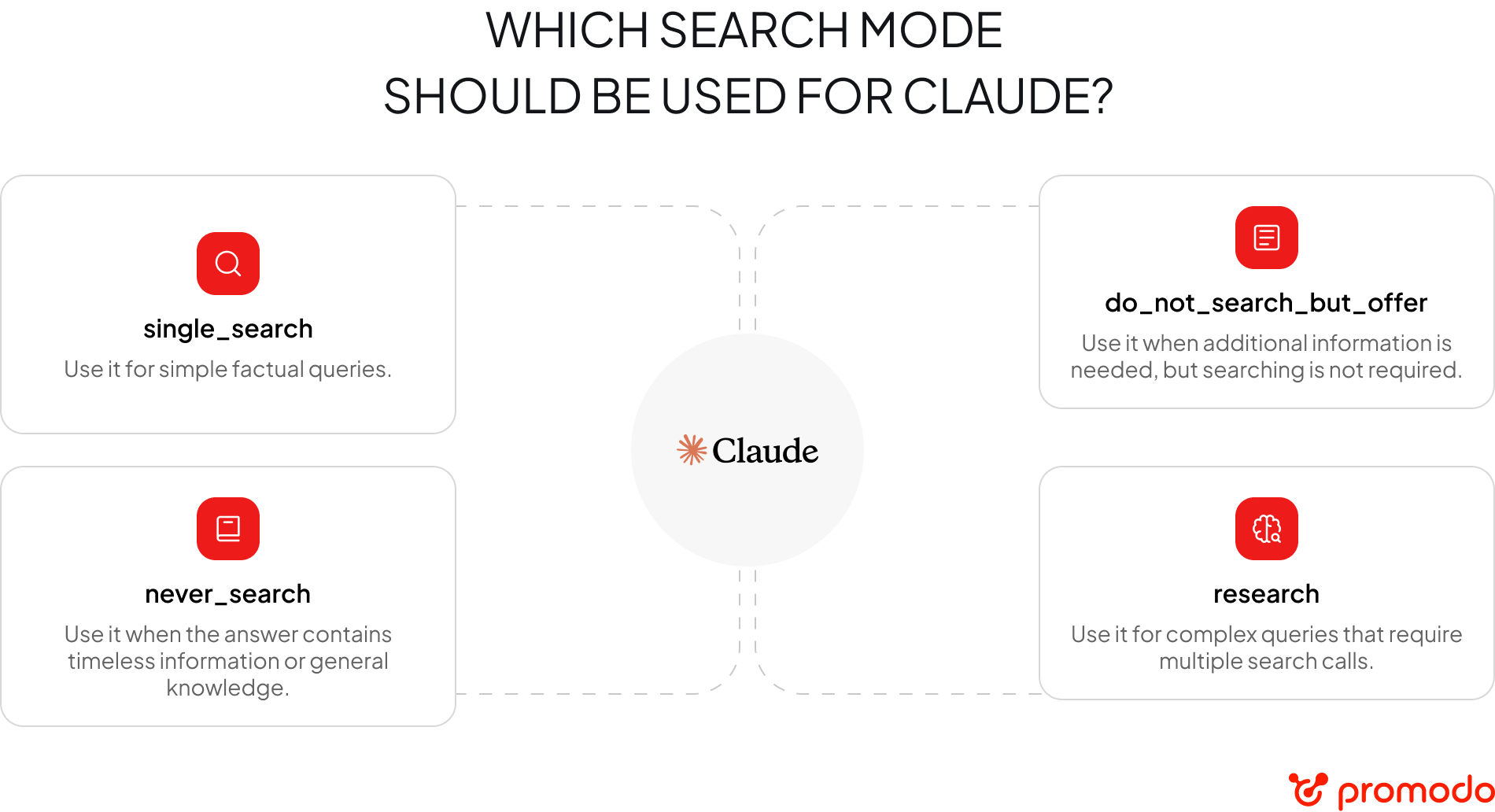

At the end of June 2025, a leak of internal documentation from the Claude AI system was announced. Well-known industry experts Hans Kronenberg and Aleyda Solis analyzed the leak and highlighted the key takeaways.

Key Findings:

“LLMs don’t just search — they reason. You need to ‘own expertise’ to appear in reasoning models. Deep search = your deep expertise.”

— Crystal Carter, SMX Advanced 2025 Conference

What this information means for us as SEO specialists:

Another researcher, Jérôme Salomon, persistently questioned the ChatGPT support team to uncover details about how its search works. According to his findings, the process runs in the following stages:

The author of the study asked ChatGPT support how the system chooses URLs from a long list of search results and received this answer:

“The decision about which pages to scan is primarily influenced by the relevance of the title, the content in the snippet, the freshness of the information, and the reliability of the domain.”

— ChatGPT Support
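The four factors named in the answer can be combined into a single score. This is a sketch only: the weights, the word-overlap relevance measure, the 180-day freshness half-life, and the `domain_trust` values are all assumptions, not values disclosed by OpenAI.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Candidate:
    url: str
    title: str
    snippet: str
    published: date
    domain_trust: float  # hypothetical reliability score, 0..1

# Hypothetical weights for the four factors named by ChatGPT support.
WEIGHTS = {"title": 0.35, "snippet": 0.25, "freshness": 0.2, "trust": 0.2}

def overlap(query, text):
    """Share of query words that appear in the text (a crude relevance proxy)."""
    q = set(query.lower().split())
    return len(q & set(text.lower().split())) / len(q) if q else 0.0

def freshness(published, today, half_life_days=180):
    """Exponential decay: 1.0 for today, 0.5 after one half-life."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)

def score(c, query, today):
    return (
        WEIGHTS["title"] * overlap(query, c.title)
        + WEIGHTS["snippet"] * overlap(query, c.snippet)
        + WEIGHTS["freshness"] * freshness(c.published, today)
        + WEIGHTS["trust"] * c.domain_trust
    )

def select_urls(candidates, query, today, k=3):
    """Pick the k highest-scoring URLs to open."""
    ranked = sorted(candidates, key=lambda c: score(c, query, today), reverse=True)
    return [c.url for c in ranked[:k]]

today = date(2025, 7, 1)
pool = [
    Candidate("https://example.com/ai-seo", "AI SEO guide", "practical ai seo tips", date(2025, 6, 20), 0.8),
    Candidate("https://example.org/pasta", "Cooking pasta", "pasta recipes", date(2023, 7, 1), 0.9),
]
print(select_urls(pool, "ai seo guide", today, k=1))
```

Note that the title and snippet carry most of the weight in this sketch, mirroring the order in which support listed the factors; a highly trusted but off-topic, stale page still loses.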

If you’d like to see for yourself how ChatGPT selects its sources when generating an answer, here’s a quick guide:

What you will see in the JSON response:

Note: At the time of writing, only one parameter, “search_result_groups”, was accessible. This is where another expert, Mark Williams-Cook, comes in.
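If you have saved the JSON for a conversation, a small recursive search can pull out the `search_result_groups` parameter without assuming where it sits in the structure. The inner field name `url` used by `collect_urls` is an assumption about the entries; check it against your own file.

```python
import json
import sys

def find_key(node, key):
    """Yield every value stored under `key` anywhere in a nested JSON structure."""
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_key(item, key)

def collect_urls(node, field="url"):
    """Collect string values of `field` (assumed name) inside every search_result_groups value."""
    urls = []
    for groups in find_key(node, "search_result_groups"):
        urls.extend(v for v in find_key(groups, field) if isinstance(v, str))
    return urls

if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_sources.py saved_conversation.json
    with open(sys.argv[1], encoding="utf-8") as f:
        for url in collect_urls(json.load(f)):
            print(url)
```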


The first thing to note: in traditional SEO, we optimized an entire web page, but LLMs are not interested in the whole page. They use only specific parts of its content, known as chunks.

Chunks are small, self-contained pieces of content that come together to form a larger article or page.

To ensure your content appears in AI responses, you need to account for the way LLMs now interact with text: create short, logically complete fragments that can be easily integrated into AI-generated answers.


A chunk is a separate, logically complete text fragment of roughly 100–300 tokens (about 75–225 words) that an LLM (ChatGPT, Claude, Gemini) can extract, analyze, and use when generating its response.

These fragments aren’t “assembled manually”; they are extracted and processed automatically. To appear in an AI answer, each idea needs to be short and complete on its own.

Even with massive context windows (GPT-4 Turbo: 128K tokens; Gemini 1.5: up to 2M), these systems still work with individual semantic units, not the entire text.
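A simple way to check your own texts against this size range is to pack whole paragraphs into chunks under a token budget. The sketch assumes the rough 0.75 words-per-token ratio implied by the figures above (real tokenizers vary by model and language) and never splits a paragraph, so an overly long paragraph becomes its own oversized chunk, which is a useful signal to break it up.

```python
import re

WORDS_PER_TOKEN = 0.75  # rough English heuristic implied by "100 tokens ~ 75 words"

def estimate_tokens(text):
    """Approximate token count from the word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)

def chunk_paragraphs(text, max_tokens=300):
    """Greedily pack whole paragraphs into chunks of at most max_tokens (estimated)."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks, current = [], []
    for para in paragraphs:
        candidate = "\n\n".join(current + [para])
        if current and estimate_tokens(candidate) > max_tokens:
            chunks.append("\n\n".join(current))  # close the current chunk
            current = [para]
        else:
            current.append(para)  # a lone oversized paragraph still lands here
    if current:
        chunks.append("\n\n".join(current))
    return chunks

article = "\n\n".join(" ".join(["word"] * 60) for _ in range(4))
for i, chunk in enumerate(chunk_paragraphs(article), start=1):
    print(f"chunk {i}: ~{estimate_tokens(chunk)} tokens")
```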

Structure your text into chunks. To do this, first consider the following points:

An entity is a person, object, place, or any other concept that search engines and LLMs can understand.

Embedding algorithms work best when each chunk has a single, clear, and consistent meaning.
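To see why a focused chunk beats a mixed one, the sketch below ranks chunks against a query by cosine similarity. Word counts stand in for the neural embeddings real systems use, and the two chunks are invented examples; the principle is the same: off-topic words dilute a chunk’s vector and lower its score.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Word counts as a stand-in for a neural embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, k=1):
    """Return the k chunks most similar to the query."""
    q = bag_of_words(query)
    return sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)[:k]

focused = ("Query fan-out splits one query into several sub-queries "
           "and retrieves results for each sub-query.")
mixed = ("Our agency history, office locations, and team photos. "
         "Query fan-out is also mentioned here.")
print(retrieve("how does query fan-out work", [mixed, focused]))
```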

We live in the era of open-book AI retrieval. What’s needed is high-quality, semantically structured content with clear topics and chunks.

— Dawn Anderson, SMX Advanced 2025 Conference

Example:

LLMs are still developing and constantly evolving. They share many similarities but follow different evolutionary paths: some rely more on semantic analysis, while others use manual configurations and weigh additional factors that have nothing to do with content quality. That’s why what works for one AI search system may not deliver results in another.
